Companies are increasingly competing on customer experience, and content discovery and search are important aspects of that experience. Discovery models (such as text search, voice search, visual search, chatbots, natural language search, and faceted search) may overlap in some areas of functionality, but they also serve different use cases and appeal to different types of users. In fact, the idea for this column was triggered by my habit of taking pictures of products with my phone camera and asking in-store personnel whether those products are available.

Think Outside the Text Box

In the digital world, text-based search has been the dominant model for content discovery since the early days of the internet. With the wide proliferation of smartphones and advances in AI, search that relies on voice and image inputs is gaining traction. Let’s take a look at visual search.

In visual search, you provide an image as the query, and the engine returns images that are visually similar to it. You can, of course, search the web for “images like this” using Google or Bing, but there are also several interesting visual search use cases in omnichannel commerce and content management.
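To make the idea of "visually similar" concrete, here is a minimal sketch in Python. It assumes each image has already been converted into a numeric feature vector (an embedding) by some model; the three-number vectors, the item names, and the `rank_similar` helper are all hypothetical and hand-made for illustration. Real systems use much longer vectors and specialized indexes.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector is treated as similar to nothing
    return dot / (norm_a * norm_b)

def rank_similar(query_vec, catalog, top_k=3):
    """Score every catalog image against the query and keep the best top_k."""
    scored = [(item_id, cosine_similarity(query_vec, vec))
              for item_id, vec in catalog.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Hypothetical embeddings; a real model would produce these from pixels.
CATALOG = {
    "red-dress": [0.9, 0.1, 0.0],
    "maroon-dress": [0.8, 0.2, 0.1],
    "blue-sofa": [0.0, 0.2, 0.9],
}

query = [0.95, 0.05, 0.0]  # assumed embedding of the photo the user snapped
results = rank_similar(query, CATALOG, top_k=2)
for item_id, score in results:
    print(item_id, round(score, 3))
```

With these made-up vectors, the two dresses rank ahead of the sofa, which is the behavior a shopper would expect from a photo of a dress.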

Visual Search Use Cases

In certain categories of commerce (such as fashion and home decor), it’s often very difficult to describe what you have in mind using a text-search box. It’s much simpler to take a picture of a dress and look for the same or similar dresses. This is the snap-to-shop use case. In many cases, a picture encapsulates rich metadata, such as product category and product attributes. A picture of a shirt implies a whole set of search filters: not only color, but also collar type, sleeve length, pockets, any labels/logos, style of cut, and more.

Users can reach the item they are interested in directly instead of having to go through multiple pages of search results. Some products carry QR codes to enable this. Visual search supplements QR codes and in one respect goes a notch further: you can snap a picture of the product itself, not only of the packaging on which a QR code is printed.

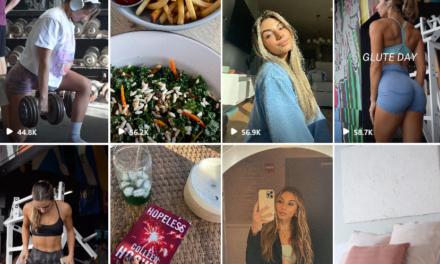

Machine learning techniques are now sophisticated enough to also recognize separate objects within a picture. This enables additional shop-the-look use cases that create cross-sell opportunities. For content publishers such as media companies, visual search represents an opportunity to transform fashion sites into easily shoppable experiences.

How Is Visual Search Implemented?

Visual search functionality has been around for more than a decade, but the capabilities have significantly improved in the last 3 years or so. Behind modern visual search engines are artificial neural network models that are pre-trained on large product catalogs with hundreds of product categories and thousands of stock keeping units (SKUs).

When a user performs an image search, the algorithms first determine the product category and then look for matches within that category, which improves both search accuracy and performance. In a refinement of this approach, the user provides both text and image inputs for even better accuracy. As with most machine learning models, image search works best when there is a large amount of data in the catalog. As a rule of thumb, visual search makes sense when there are more than a thousand SKUs. Data, engineering, and scalability considerations are similar to those of other machine learning projects for image recognition.
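The category-first flow described above can be sketched roughly as follows. This is an illustration under stated assumptions, not how any particular engine works: the catalog, the SKU names, and the embeddings are hypothetical, and the category is guessed by comparing the query to the average vector of each category, which stands in for the trained classifier a real system would use.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0

# Hypothetical catalog rows: (sku, category, embedding).
CATALOG = [
    ("SKU-1", "dress", [0.9, 0.1, 0.0]),
    ("SKU-2", "dress", [0.7, 0.3, 0.0]),
    ("SKU-3", "sofa", [0.1, 0.1, 0.9]),
    ("SKU-4", "sofa", [0.0, 0.3, 0.8]),
]

def category_centroids(catalog):
    """Average the embeddings of each category (stand-in for a classifier)."""
    sums, counts = {}, {}
    for _, category, vec in catalog:
        acc = sums.setdefault(category, [0.0] * len(vec))
        for i, value in enumerate(vec):
            acc[i] += value
        counts[category] = counts.get(category, 0) + 1
    return {c: [v / counts[c] for v in acc] for c, acc in sums.items()}

def search(query_vec, catalog, top_k=2):
    """Step 1: pick the likeliest category. Step 2: rank only within it."""
    centroids = category_centroids(catalog)
    category = max(centroids, key=lambda c: cosine(query_vec, centroids[c]))
    candidates = [(sku, vec) for sku, cat, vec in catalog if cat == category]
    ranked = sorted(candidates, key=lambda p: cosine(query_vec, p[1]), reverse=True)
    return category, [sku for sku, _ in ranked[:top_k]]

category, skus = search([0.95, 0.05, 0.0], CATALOG)
print(category, skus)
```

Restricting the second step to one category means fewer comparisons per query and removes look-alike items from unrelated categories. A text input, where the user supplies one, could be applied as an additional filter on the candidate list before ranking.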

Visual search is a fast-growing field. Amazon, eBay, and Pinterest offer visual search functionality on their sites. Beyond discovering visually similar items and powering inspire-me product recommendations, visual search also creates an elegant pathway between the offline and online worlds. The entire world is potentially one big shopping mall. Visual search is where the physical and digital worlds come together.